Sun Rays are Sun Microsystems (now Oracle) thin clients. A Sun Ray installation basically consists of a Sun Ray server and the respective Sun Ray clients. The Sun Ray server is not a particular type of hardware but a piece of software which can run on various platforms; in this article "Sun Ray server" refers to both the machine and the software, and the context should make clear which is meant. The Sun Ray clients used to be a particular device which came in various flavours, and were later complemented by a soft client to give e.g. notebook or tablet users a virtual option.

When users log into the Sun Ray server, the respective entries in utmpx and wtmpx need to be created, and a distinction is made between logins coming from a Sun Ray client (hard or soft) and logins from other sources (e.g. console or remote logins).

In the next two articles I will take a deeper look into the wtmpx entries created by Sun Ray clients.

How to identify a Sun Ray client login

When looking at the members of 'struct futmpx' in /usr/include/utmpx.h, there are two which identify a Sun Ray client login. First of all, ut_line is set to 'dtlocal', and secondly, the ut_host member contains the name of the DISPLAY, i.e. a colon followed by a number, e.g. ':21'.
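Both criteria can be expressed as a small Perl predicate. This is a minimal sketch that assumes the record has already been unpacked into a hash reference keyed by the futmpx member names (the script further below produces such a reference with Convert::Binary::C). The name is_sunray_entry is hypothetical, and requiring ut_host to match exactly ':<number>' is my own tightening of the rule described above.

```perl
use strict;
use warnings;

# Hypothetical helper: true if an unpacked futmpx record (hash reference
# keyed by the struct member names) belongs to a Sun Ray session.
sub is_sunray_entry {
    my ($rec) = @_;
    my $line = $rec->{ut_line} // '';
    my $host = $rec->{ut_host} // '';

    # Criterion 1: ut_line is 'dtlocal'
    # Criterion 2: ut_host holds a DISPLAY name such as ':21'
    return $line eq 'dtlocal' && $host =~ /^:\d+$/;
}
```

The same check applies to login and logout records, since both carry 'dtlocal' and the DISPLAY name.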

An entry in wtmpx is created for each login but also for each logout. Aside from user name, date and time etc., a login entry is identified by ut_type being set to 7 (USER_PROCESS), and the corresponding logout entry has ut_type set to 8 (DEAD_PROCESS).
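In Perl, the two ut_type values can be given the names used in utmpx.h, which makes the checks in the main script easier to read. A minimal sketch: session_event is a hypothetical helper, and only the two values discussed here are mapped; every other entry type returns undef.

```perl
use strict;
use warnings;

# Values of ut_type as defined in utmpx.h
use constant {
    USER_PROCESS => 7,    # written at login
    DEAD_PROCESS => 8,    # written at logout
};

# Hypothetical helper: map ut_type to 'login', 'logout' or undef
sub session_event {
    my ($ut_type) = @_;
    return 'login'  if $ut_type == USER_PROCESS;
    return 'logout' if $ut_type == DEAD_PROCESS;
    return;    # any other entry type is irrelevant here
}
```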

Here is an example of a login/logout pair of entries for user 'joes', as shown by fwtmp. Of course the two lines are not adjacent in the file, since many more entries were written between the login and the logout.

```
joes dt6q dtlocal 40881 7 0000 0000 1164719643 0 0 4 :21 Tue Nov 28 14:14:03 2006
joes dt6q dtlocal 40881 8 0000 0000 1167177826 0 0 4 :21 Wed Dec 27 01:03:46 2006
```

So user 'joes' logged in on Nov 28 with process id 40881, and his DISPLAY was assigned ':21'. He logged out again about one month later.
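When working with the fwtmp text output instead of the binary file, such a line can simply be split on whitespace. This is a sketch under two assumptions: the first twelve fields contain no blanks (the date string follows them), and only the fields used in this article are named; the remaining numeric fields are ignored. parse_fwtmp_line is a hypothetical name.

```perl
use strict;
use warnings;

# Hypothetical parser for one line of fwtmp output such as
#   joes dt6q dtlocal 40881 7 0000 0000 1164719643 0 0 4 :21 Tue Nov 28 14:14:03 2006
# Assumption: fields 0..11 contain no blanks; everything after them is the date.
sub parse_fwtmp_line {
    my ($text) = @_;
    my @f = split ' ', $text;
    return if @f < 12;    # not a complete entry

    my %rec = (
        user  => $f[0],
        line  => $f[2],
        pid   => $f[3],
        type  => $f[4],
        epoch => $f[7],    # seconds since 1970-01-01 UTC
        host  => $f[11],
        date  => join(' ', @f[12 .. $#f]),
    );
    return \%rec;
}
```

With the two lines above, the session length is (1167177826 - 1164719643) / 3600, i.e. about 682.83 hours.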

There are a couple of cases in which no corresponding logout entry can be found in wtmpx: either the server crashed unexpectedly (quite rare), or the admins have set up regular backups and re-initialization of wtmpx (the old files are very often retained as wtmpx.1, wtmpx.2 and so on), so the login entry ends up in a different file than the logout entry.

The code in this article will assume that corresponding login/logout pairs can be found in one file.

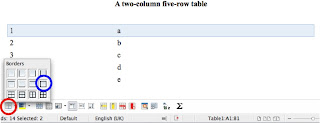

My code will now scan wtmpx for all login/logout pairs of Sun Ray entries and create a data file which can be used by gnuplot to visualize the findings.

The graph will show the timeline on the x-axis and the DISPLAY numbers on the y-axis.

A login/logout pair will be represented by a vector which starts at login time and ends at logout time, the length of the vector being the duration of the session.

For the example above, the corresponding data line looks like this:

```
joes 21 20061128141403 20061227010346 682.83
```

It contains the username, the DISPLAY number, start and end time and the duration in hours.
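Producing such a line from a login/logout pair needs only the user name, the DISPLAY and the two epoch values. The following sketch does the same formatting as the script further below, but as a standalone hypothetical function format_session using POSIX::strftime. Like the script it uses localtime, so the timestamps depend on the time zone of the machine; the fwtmp output above corresponds to a UTC+1 zone.

```perl
use strict;
use warnings;
use POSIX qw(strftime);

# Format an epoch value as YYYYMMDDhhmmss in local time, the layout
# gnuplot reads with: set timefmt "%Y%m%d%H%M%S"
sub epoch_to_stamp {
    my ($epoch) = @_;
    return strftime('%Y%m%d%H%M%S', localtime($epoch));
}

# Hypothetical helper: one data line for a finished session
sub format_session {
    my ($user, $display, $login, $logout) = @_;
    (my $num = $display) =~ s/^://;    # ':21' -> '21'
    return sprintf('%s %s %s %s %.2f',
        $user, $num, epoch_to_stamp($login), epoch_to_stamp($logout),
        ($logout - $login) / 3600);    # duration in hours
}
```

In a UTC+1 zone such as CET, format_session('joes', ':21', 1164719643, 1167177826) yields joes 21 20061128141403 20061227010346 682.83.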

The graph would be this:

And here is the gnuplot code:

```gnuplot
set title 'wtmpx - Example'
set key off
set grid
set ylabel 'Display number'
set ytics nomirror
set yrange [0:30.5]
set timefmt "%Y%m%d%H%M%S"
set xlabel "Date"
set xdata time
set xrange ['20061101000000':'20070101000000']
set format x "%Y\n%m/%d"
set terminal png small size 600,300
set output "Example.png"
set style arrow 1 head filled size screen 0.01,20,65 ls 1 lc rgb "red"
# Columns: 3 = login time (x), 2 = display number (y),
# $5*3600 = session length converted from hours to seconds (x offset),
# 0 = no vertical offset, so every session is drawn as a horizontal arrow
plot '...filename...' using 3:2:($5*3600):(0) with vectors arrowstyle 1
```

Almost the same code will be used to create the graphs for many user sessions over a longer period of time.

Create the gnuplot data file out of wtmpx

gnuplot will require data files with entries like this:

```
tmnsn 30 20060403075755 20060410153215 175.57
tt12339 79 20060412085413 20060412180126 9.12
nm8720 8 20060412095225 20060412180421 8.20
rr13447 84 20060412141539 20060412183617 4.34
oo2006 101 20060412085250 20060412201402 11.35
zpowv 53 20060403091259 20060413010213 231.82
```

Using the Perl module Convert::Binary::C (which I discussed in a previous article) and the knowledge about which wtmpx entries are Sun Ray entries and which can be skipped, the following code creates a valid data file.

After declaring the Convert::Binary::C settings (as in my previous wtmpx blog), the while loop which reads the entries follows this logic:

It stores login entries in some data structures. When a corresponding logout entry is found, a line of data is printed. When a system reboot entry is found, all currently stored login entries are transformed into data lines, using the reboot time as the logout time for all of them.

After the complete wtmpx file has been read, the remaining login entries correspond to sessions which are still active. They are transformed into data lines too, with 'ongoing' in place of the end time and the current time used to compute the duration.

```perl
use strict;
use warnings;
use Convert::Binary::C;

my $utmpxh = "utmpx.h";    # include file

# two OS specific settings
my $struct = "futmpx";
my $wtmpx  = "/var/adm/wtmpx";    # on Solaris

my $c = Convert::Binary::C->new(
    Include => ['/usr/include', '/usr/include/i386-linux-gnu'],
    Define  => [qw(__sparc)],
);
$c->parse_file($utmpxh);

# Choose native alignment
$c->configure(Alignment => 0);    # the same on both OSs

my $sizeof = $c->sizeof($struct);    # on Solaris (=372)

$c->tag($struct . '.ut_user', Format => "String");
$c->tag($struct . '.ut_line', Format => "String");
$c->tag($struct . '.ut_host', Format => "String");

my %start;    # hash to store session start times per display
my %user;     # hash to store session user names per display

open(my $fh, '<:raw', $wtmpx) or die("Cannot open $wtmpx: $!\n");
my $buffer;

# Read wtmpx record by record
while (read($fh, $buffer, $sizeof) == $sizeof) {
    my $unpacked = $c->unpack($struct, $buffer);    # Solaris

    # We need these 5 members of 'struct futmpx'
    my $ut_user = $unpacked->{ut_user};          # user name
    my $ut_line = $unpacked->{ut_line};          # looking for 'dtlocal'
    my $display = $unpacked->{ut_host};          # display name like ':52'
    my $ut_type = $unpacked->{ut_type};          # type of entry 7=login, 8=logout
    my $epoch   = $unpacked->{ut_tv}{tv_sec};    # timestamp of the entry in UNIX time

    # If a system restart happens then all previous sessions should be
    # printed and variables re-initialized
    if ($ut_line eq "system boot" || $ut_line eq "system down") {
        foreach my $disp (keys %start) {
            print_row($disp, $epoch);
            delete $start{$disp};
            delete $user{$disp};
        }
    }

    # Skip any entry which is not 'dtlocal'
    next if ($ut_line ne "dtlocal");

    # Login entries
    if ($ut_type == 7) {
        # Set the start time and user for 'display' session
        $start{$display} = $epoch;
        $user{$display}  = $ut_user;
    }

    # Logout entries
    if ($ut_type == 8) {
        # Check if a corresponding and valid 'login' entry exists
        if (   exists $start{$display}
            && $user{$display} eq $ut_user
            && $start{$display} <= $epoch)
        {
            print_row($display, $epoch);

            # After printing a line the 'display' can be reused
            delete $start{$display};
            delete $user{$display};
        }
    }
}
close($fh);

# What is left now are all sessions which are still running
my $now = time();
foreach my $disp (keys %start) {
    print_row($disp, $now, "ongoing");
    delete $start{$disp};
}

exit 0;

# Convert epoch time to string
sub epoch_to_date {
    my ($epoch) = @_;
    my ($seconds, $minutes, $hours, $day_of_month, $month, $year) = localtime($epoch);

    # return something like 20060428091531 = April 28 2006 09:15:31
    return sprintf("%04d%02d%02d%02d%02d%02d",
        $year + 1900, $month + 1, $day_of_month,
        $hours, $minutes, $seconds);
}

# Print a data entry
sub print_row {
    my ($disp, $epoch, $ongoing) = @_;

    # Session duration in seconds
    my $duration = $epoch - $start{$disp};

    # Remove the colon in 'display'
    (my $d = $disp) =~ s/://;

    # Set 'end' to either the real end time of the session or to 'ongoing'
    my $end = $ongoing ? $ongoing : epoch_to_date($epoch);

    # Now print the line
    printf "%-10s %4s %6s %s %s %.2f\n",
        $user{$disp}, $d, epoch_to_date($start{$disp}), $end, $duration / 3600;
}
```
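The pairing logic can also be exercised without a binary wtmpx file and without Convert::Binary::C. The following is a sketch, not the script above: pair_sessions is a hypothetical function that takes records already decoded into hash references, in file order, with the timestamp stored under a made-up key epoch, and it returns the sessions instead of printing them.

```perl
use strict;
use warnings;

# Hypothetical sketch of the pairing logic on already decoded records.
# Each record: { ut_user, ut_line, ut_host, ut_type, epoch }.
sub pair_sessions {
    my ($records, $now) = @_;
    my (%start, %user, @sessions);

    # Close one session and free its display for reuse
    my $close = sub {
        my ($disp, $end, $ongoing) = @_;
        push @sessions, {
            user    => $user{$disp},
            display => $disp,
            start   => $start{$disp},
            end     => $end,
            ongoing => $ongoing ? 1 : 0,
        };
        delete $start{$disp};
        delete $user{$disp};
    };

    for my $r (@$records) {
        my $line = $r->{ut_line};

        # A reboot or shutdown ends every open session
        if ($line eq 'system boot' || $line eq 'system down') {
            $close->($_, $r->{epoch}) for sort keys %start;
            next;
        }
        next unless $line eq 'dtlocal';

        my $disp = $r->{ut_host};
        if ($r->{ut_type} == 7) {    # login
            $start{$disp} = $r->{epoch};
            $user{$disp}  = $r->{ut_user};
        }
        elsif ($r->{ut_type} == 8    # logout matching a stored login
            && exists $start{$disp}
            && $user{$disp} eq $r->{ut_user}
            && $start{$disp} <= $r->{epoch})
        {
            $close->($disp, $r->{epoch});
        }
    }

    # Whatever is left is still running at time $now
    $close->($_, $now, 1) for sort keys %start;
    return @sessions;
}
```

Returning a list of hashes keeps the state handling separate from the output format, so the result could be passed to a formatting routine such as print_row or written out in another layout.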

With the data files in place, it's time to create some graphs in the next blog post.